The Conundrum of Autonomous Cars

Are autonomous cars really the technology of the future?

February 21, 2016

I would like to start off with an apology: as you may have (but probably have not) noticed, I have not contributed to this newspaper in a solid, oh, nine months or so. The reason? Well, erm, there isn’t one. Sorry. Not much of an excuse. I understand that the head of our newspaper, Mr. David Pascone, would have loved to hear that I have been focusing on my papers for English, and that I have refined my style of writing such that it is on par with that of a literary god. That didn’t happen. I also have a hunch that our editor and captain of the cross-country team, Sawyer Balint, would have accepted my laziness had it been caused by my dedication to running, and the deep passion that I (don’t really) have for it. Well, that wasn’t the reason either. So, once again, sorry.

But, hey, this is a newspaper, so I have to type some words on a computer that will be read by you – yes, you! Which brings me, not so neatly, to autonomous cars.

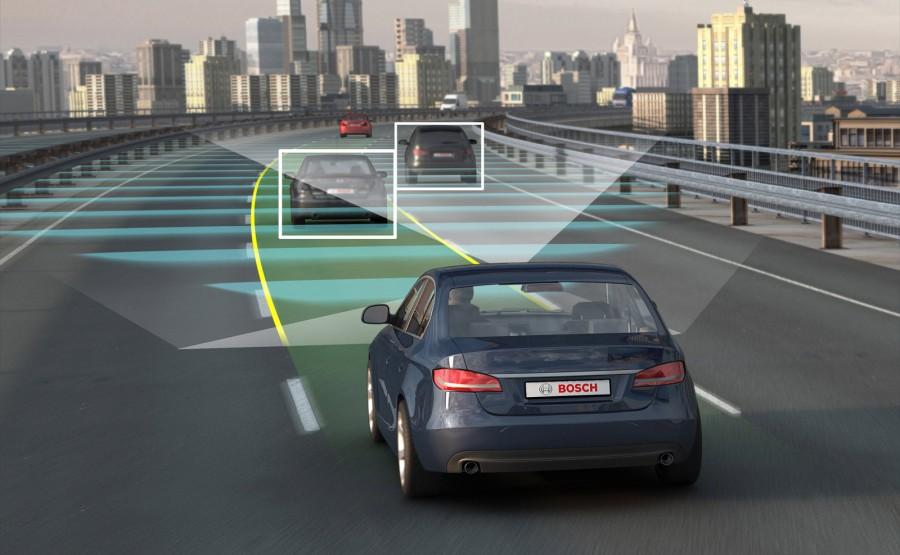

Autonomous cars promise to bring peace and stability to the world's increasingly dangerous roads. Because they offer drivers the opportunity to let go of the wheel, read texts, send emails, and, if they are feeling ambitious, eat their breakfast, this new form of automobile is being hailed as the future.

But is it?

Autonomous cars have many flaws, whether inadequate software, sensors, or maps; however, it all boils down to a simple question: can cars make life-or-death decisions? I argue that they cannot. First, consider that these decisions have to take into account conflicting ideas of morality, as well as the different virtues that different people hold to be true. Rather than elaborate at length, I will put it in terms of a scenario: say you are driving to work (or rather, being driven to work by your autonomous car) and, out of nowhere, a truck comes flying straight towards you down the one-way street you are on. To avoid getting killed, your car can swerve to the left and hit a group of elderly people, swerve to the right and hit a mother and her young child, or keep going straight and get you killed. The idea that a string of code is in charge of your future and the futures of those around you forces us to consider the moral implications of creating these autonomous vehicles.

The situation, though fairly unlikely, is thought-provoking. In such a predicament, a CPU and some software have to make a decision that most philosophers could never make with full confidence. Is this right? I say no. Thus far, software has been taught to perform tasks based on commands written in advance by its developer. Like the worst student in the world, it memorizes its way through problems and then acts on what it knows, rather than making its own applied decisions. This is worrying. What if the person who programmed the car forgot to include a command that is vital to the well-being of the people inside and outside the car? This happens all the time, at least with more familiar technology such as phones. This time, however, instead of not being able to send a text, you won't be able to stop before you hit the tree that seems to be coming towards you rather quickly.

I suppose my point is that autonomous cars, though promising, should not be used until all their major niggles are sorted out. It is not like me to denounce new technological advancements, but I simply cannot live with the idea that the cars moving around me will one day be controlled not by a person, but by an emotionless, unfeeling computer. Being (mostly) unable to control an autonomous vehicle is troubling, to say the least, and demands second thoughts about what we want from its future. The entire concept of autonomous transportation is fundamentally flawed, and I urge you not to support it. After all, who doesn't like a nice Sunday drive?